In a previous article we introduced Azure Event Grid; if you’re new to Event Grid, check that out first to familiarise yourself with the basic concepts.

In this article we’ll create an Azure Event Grid topic and subscription and see them in action.

First, if you don’t already have one, create a free Azure account, then log in to the Azure portal.

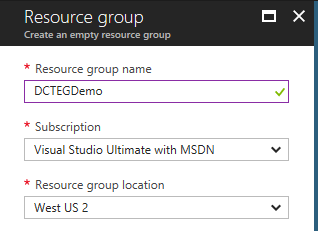

Next, create a new resource group. Azure Event Grid is currently in preview and only available in selected locations, so choose one such as West US 2.

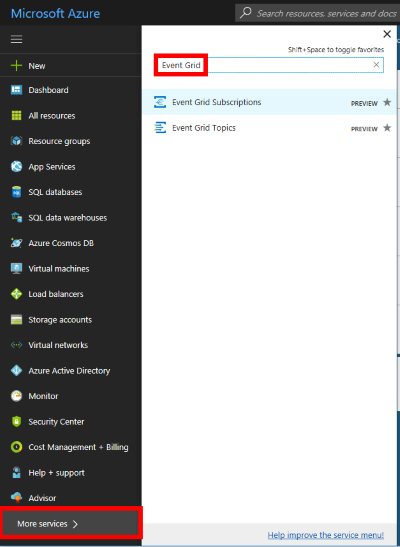

Once the resource group is created, head down to the More services option and search for Event Grid.

Event Grid offers topics provided by Azure services (such as Blob Storage) as well as custom topics that you create yourself for your own applications, third parties, and so on.

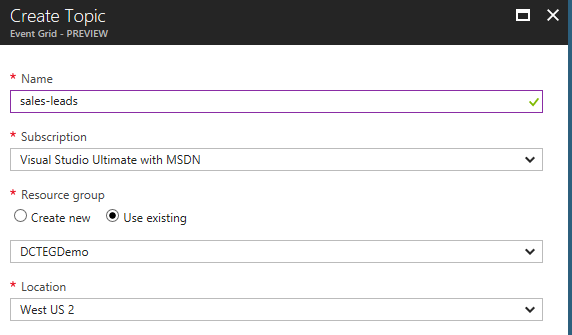

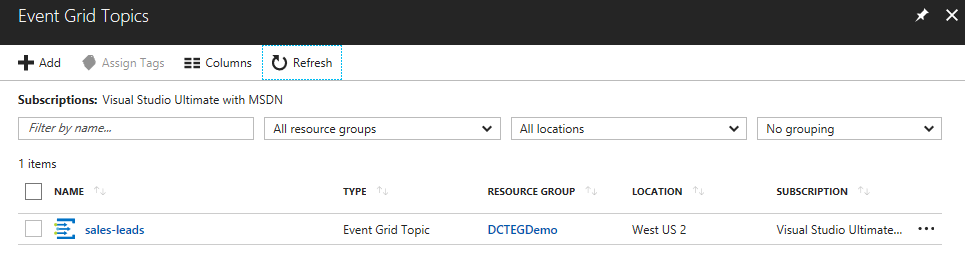

Click Event Grid Topics to see a list of all your topics, then click the +Add button to begin creating a custom topic. Name the topic sales-leads, choose the resource group created earlier, and once again choose West US 2 as the location.

Click Create, wait for the deployment to complete, and then hit refresh in the topics list to see your new topic:

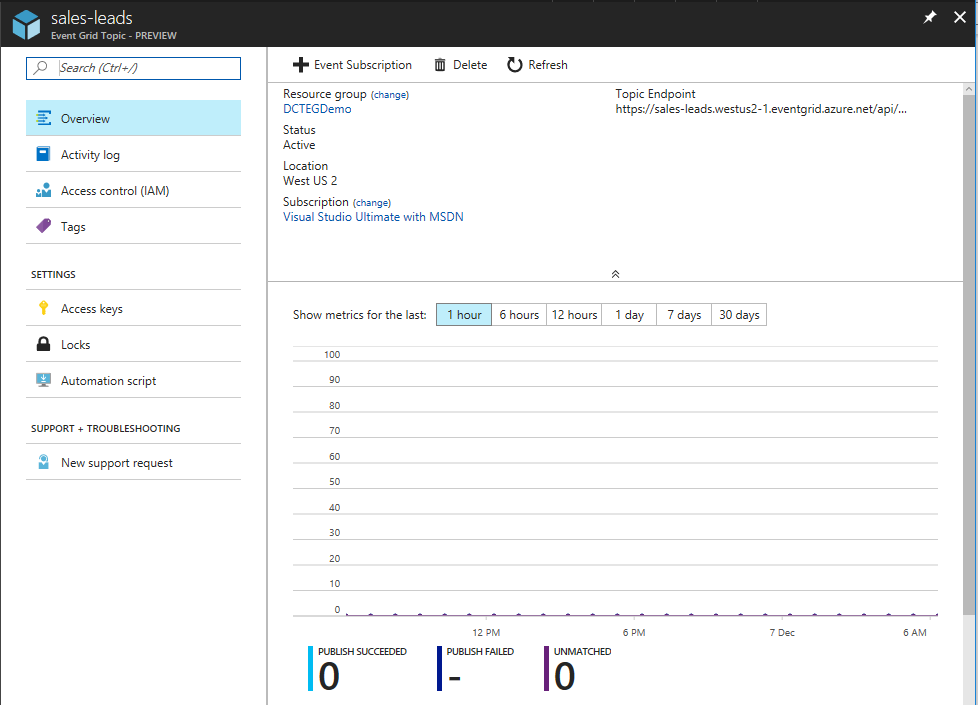

Click the newly added sales-leads topic and notice that the overview shows publish metrics:

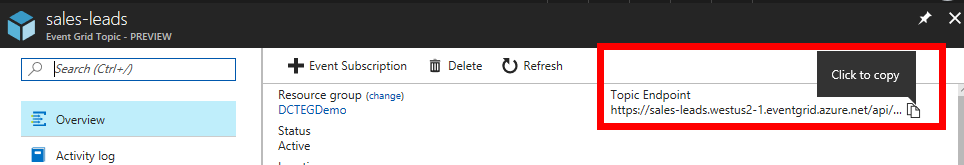

At the top right, hover over the Topic Endpoint and click the copy button to copy it to the clipboard (we’ll use it later).

In this example the copied endpoint is https://sales-leads.westus2-1.eventgrid.azure.net/api/events:

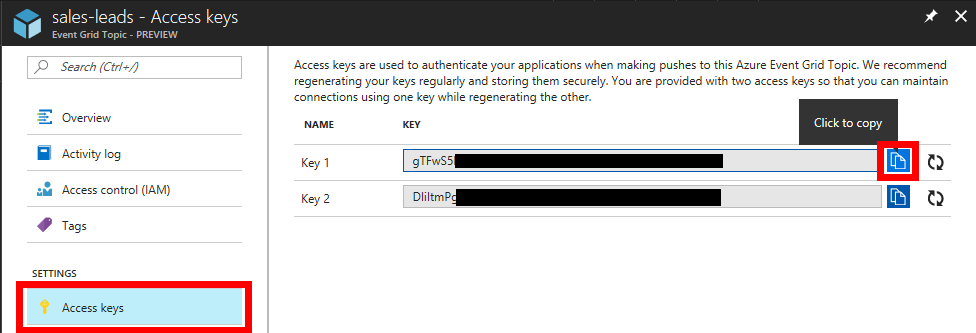

We’ll also need an access key to HTTP POST to this custom topic later. To get one, click the Access keys option and copy Key 1 for later use.

Click back on Overview and click the +Event Subscription button.

In this example we’ll create a subscription that calls a service external to Azure, which mails a conference brochure to every new sales lead. This simulates a temporary extension to the sales system for a limited period during the run-up to a sales conference. It illustrates one use case for Azure Event Grid: extending a core system without modifying it (assuming the core system already emits events).

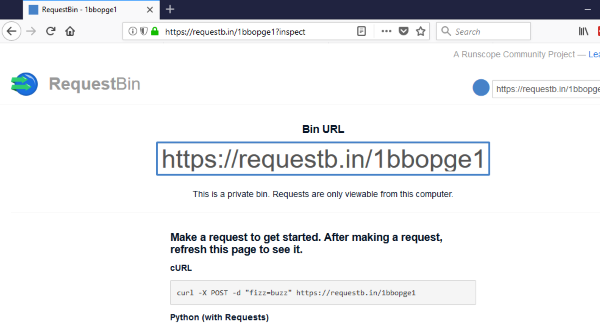

To simulate this external service we’ll use RequestBin, which you can learn more about in this article. Once you’ve created your request bin, make a note of its Bin URL.
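RequestBin is a hosted service. If you would rather run your own stand-in, the following is a minimal sketch using only Python’s standard library. It rests on assumptions not covered in this walkthrough: the server would have to be reachable from Azure at a public HTTPS URL (for example behind a reverse proxy or tunnel), and current Event Grid documentation describes a validation handshake for WebHook endpoints, in which a `Microsoft.EventGrid.SubscriptionValidationEvent` arrives whose `data.validationCode` must be echoed back as `validationResponse`. The names `handle_delivery`, `BinHandler` and `serve` are hypothetical.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical stand-in for RequestBin; not part of Event Grid itself.
# Event type used for the WebHook validation handshake (per Event Grid docs).
VALIDATION_EVENT_TYPE = "Microsoft.EventGrid.SubscriptionValidationEvent"


def handle_delivery(body: bytes) -> tuple[int, dict]:
    """Return (HTTP status, JSON response) for one POST from Event Grid.

    Deliveries are JSON arrays of events. A validation event is answered by
    echoing its validationCode; any other event is just printed, like a bin.
    """
    try:
        events = json.loads(body)
    except ValueError:  # covers JSONDecodeError and bad UTF-8
        return 400, {"error": "body is not valid JSON"}
    if not isinstance(events, list):
        return 400, {"error": "expected a JSON array of events"}

    for event in events:
        if not isinstance(event, dict):
            continue
        data = event.get("data") or {}
        if event.get("eventType") == VALIDATION_EVENT_TYPE:
            return 200, {"validationResponse": data.get("validationCode")}
        print(f"received {event.get('eventType')}: {json.dumps(data)}")
    return 200, {}


class BinHandler(BaseHTTPRequestHandler):
    """Hypothetical HTTP wrapper that feeds each POST body to handle_delivery."""

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        status, payload = handle_delivery(self.rfile.read(length))
        body = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port: int = 8080) -> None:
    """Block and serve deliveries on the given port (not called on import)."""
    HTTPServer(("", port), BinHandler).serve_forever()
```

Keeping the parsing in `handle_delivery`, separate from the HTTP plumbing, makes it easy to exercise with sample payloads such as the sales-lead event published later in this article.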

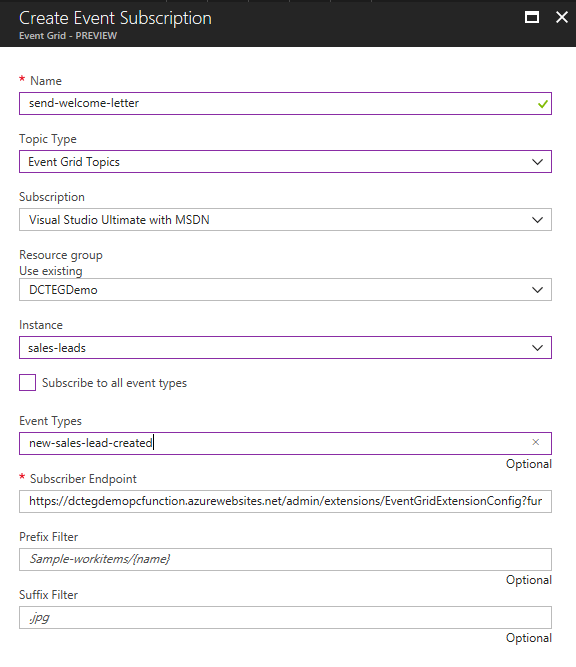

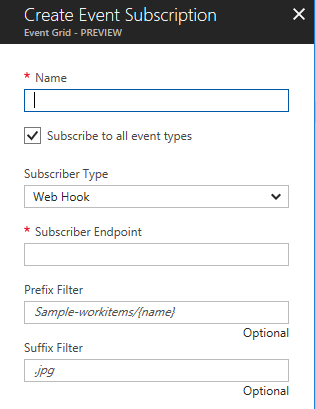

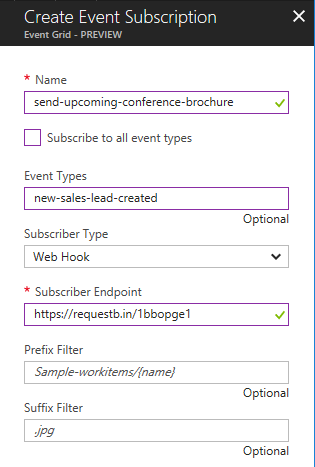

Fill out the new event subscription details:

- Name: send-upcoming-conference-brochure

- Subscribe to all event types: Untick

- Event Types: new-sales-lead-created

- Subscriber endpoint: https://requestb.in/1bbopge1 (this is the RequestBin URL created above)

Click Create.

To recap, there is now a custom topic called sales-leads that we can publish events to at its URL, https://sales-leads.westus2-1.eventgrid.azure.net/api/events. There is also an event subscription on this topic that is limited to events of type new-sales-lead-created. This subscription uses the Azure Event Grid WebHooks event handler to push those events over HTTP to the RequestBin URL.

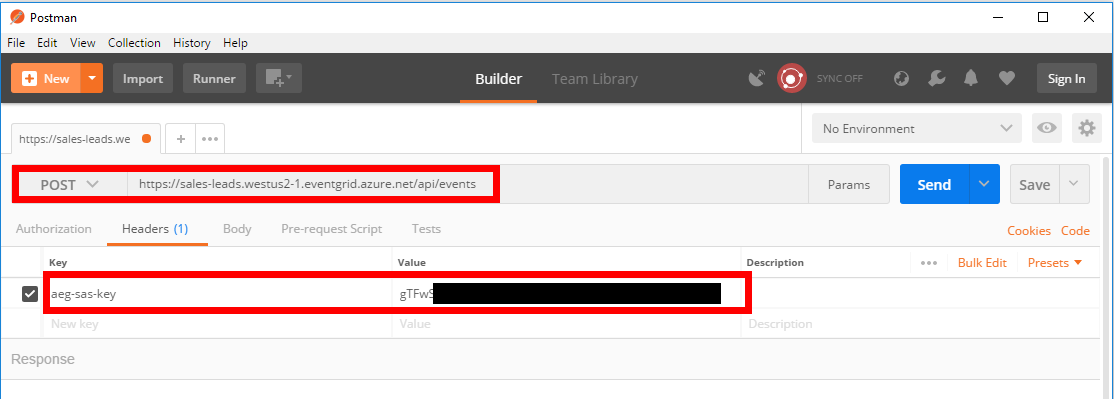

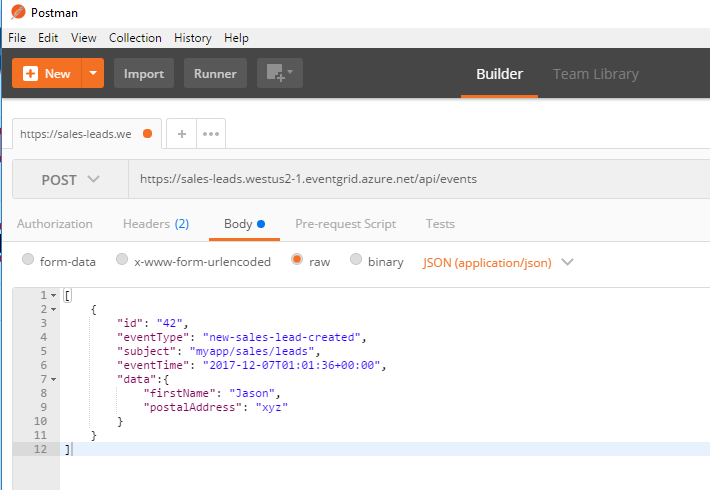

To see this in action, open Postman, select POST, and paste in the topic URL (https://sales-leads.westus2-1.eventgrid.azure.net/api/events). Add a header called aeg-sas-key and paste in the key copied earlier.

The final thing to do is define the event data we want to publish in the request body:

```json
[
  {
    "id": "42",
    "eventType": "new-sales-lead-created",
    "subject": "myapp/sales/leads",
    "eventTime": "2017-12-07T01:01:36+00:00",
    "data": {
      "firstName": "Jason",
      "postalAddress": "xyz"
    }
  }
]
```

Then click Send in Postman; you should get a 200 OK response.
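To script the same call instead of using Postman, here is a minimal sketch with Python’s standard library. It mirrors the Postman request: an HTTP POST to the topic endpoint, the access key in the aeg-sas-key header, and a JSON array of events as the body. The function names are hypothetical, and `eventTime` is generated from the current UTC time rather than hard-coded as in the JSON above.

```python
import json
import urllib.request
from datetime import datetime, timezone

# Topic endpoint copied from the portal in this walkthrough.
TOPIC_ENDPOINT = "https://sales-leads.westus2-1.eventgrid.azure.net/api/events"


def build_event(event_id: str, event_type: str, subject: str, data: dict) -> dict:
    """Hypothetical helper: one event with the same fields as the Postman payload."""
    return {
        "id": event_id,
        "eventType": event_type,
        "subject": subject,
        # ISO 8601 in UTC, e.g. "2017-12-07T01:01:36+00:00"
        "eventTime": datetime.now(timezone.utc).isoformat(timespec="seconds"),
        "data": data,
    }


def build_publish_request(endpoint: str, key: str, events: list) -> urllib.request.Request:
    """Hypothetical helper: build (but do not send) the POST to a custom topic."""
    body = json.dumps(events).encode("utf-8")  # the body is a JSON array of events
    return urllib.request.Request(
        endpoint,
        data=body,
        headers={"aeg-sas-key": key, "Content-Type": "application/json"},
        method="POST",
    )


def publish(endpoint: str, key: str, events: list) -> int:
    """Send the events and return the HTTP status code.

    urlopen raises urllib.error.HTTPError for non-2xx responses,
    e.g. if the key is wrong.
    """
    request = build_publish_request(endpoint, key, events)
    with urllib.request.urlopen(request) as response:
        return response.status
```

Calling `publish(TOPIC_ENDPOINT, key, [build_event("42", "new-sales-lead-created", "myapp/sales/leads", {"firstName": "Jason", "postalAddress": "xyz"})])` with Key 1 should return 200, just as Postman did. Reading the key from an environment variable rather than pasting it into the script keeps it out of source control.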

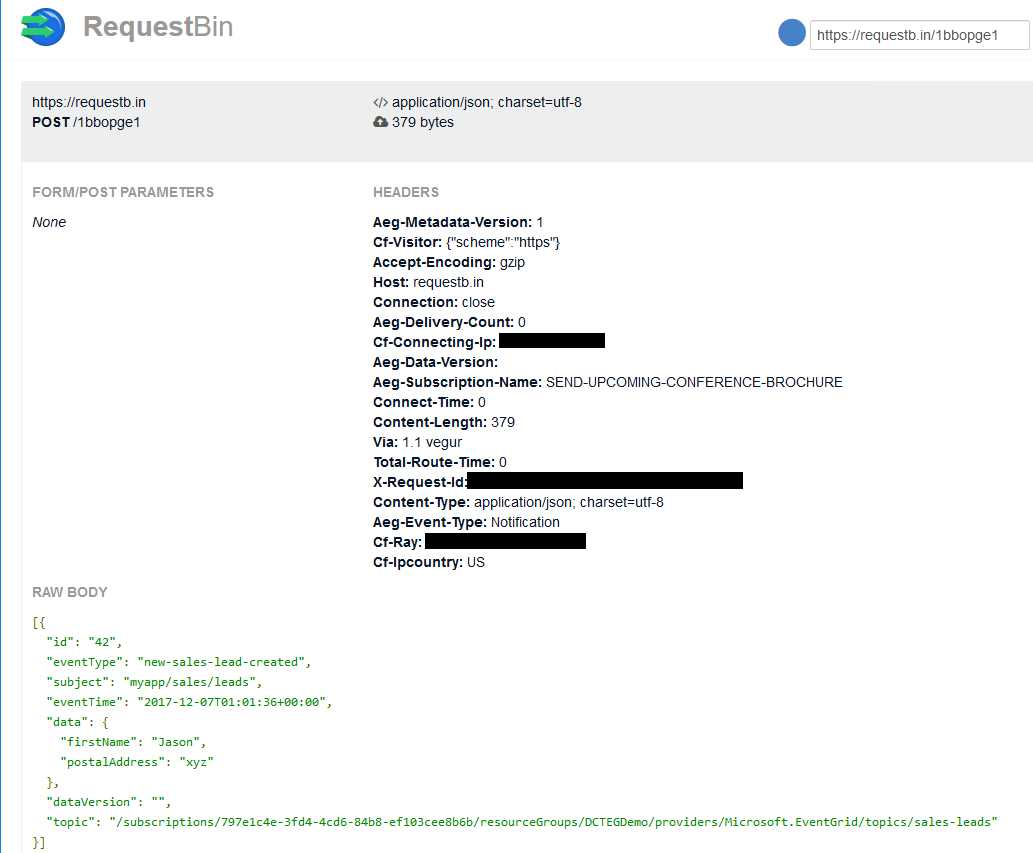

Heading back to the RequestBin window and refreshing the page shows the subscription working: the event has been pushed to RequestBin.

Because the event subscription filters on the new-sales-lead-created event type, sending a different event type from Postman (for example, "eventType": "new-sales-lead-rejected") won’t trigger the subscription, and the event won’t be pushed to RequestBin.
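The filtering behaviour can be modelled locally. The following is a minimal sketch, not Event Grid’s implementation: the `EventSubscription` class is hypothetical, it treats an empty type list as "Subscribe to all event types", and it assumes an exact string match on `eventType` (the service’s own matching rules, for example around letter case, may differ).

```python
from dataclasses import dataclass, field


@dataclass
class EventSubscription:
    """Hypothetical local model of a subscription with an event-type filter.

    An empty included_event_types list stands in for ticking
    "Subscribe to all event types" in the portal.
    """

    name: str
    endpoint: str
    included_event_types: list[str] = field(default_factory=list)

    def accepts(self, event: dict) -> bool:
        """True if this subscription would deliver the event (exact match assumed)."""
        if not self.included_event_types:
            return True
        return event.get("eventType") in self.included_event_types

    def select(self, events: list[dict]) -> list[dict]:
        """Return only the events this subscription would push to its endpoint."""
        return [event for event in events if self.accepts(event)]
```

With the subscription from this walkthrough (`EventSubscription("send-upcoming-conference-brochure", "https://requestb.in/1bbopge1", ["new-sales-lead-created"])`), `select` on a batch containing one new-sales-lead-created event and one new-sales-lead-rejected event returns only the created one.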